Combined verification for the safety of autonomous driving

How can the verification of neural networks and of traditional software components be combined to provide assurance evidence for systems comprising both types of components?

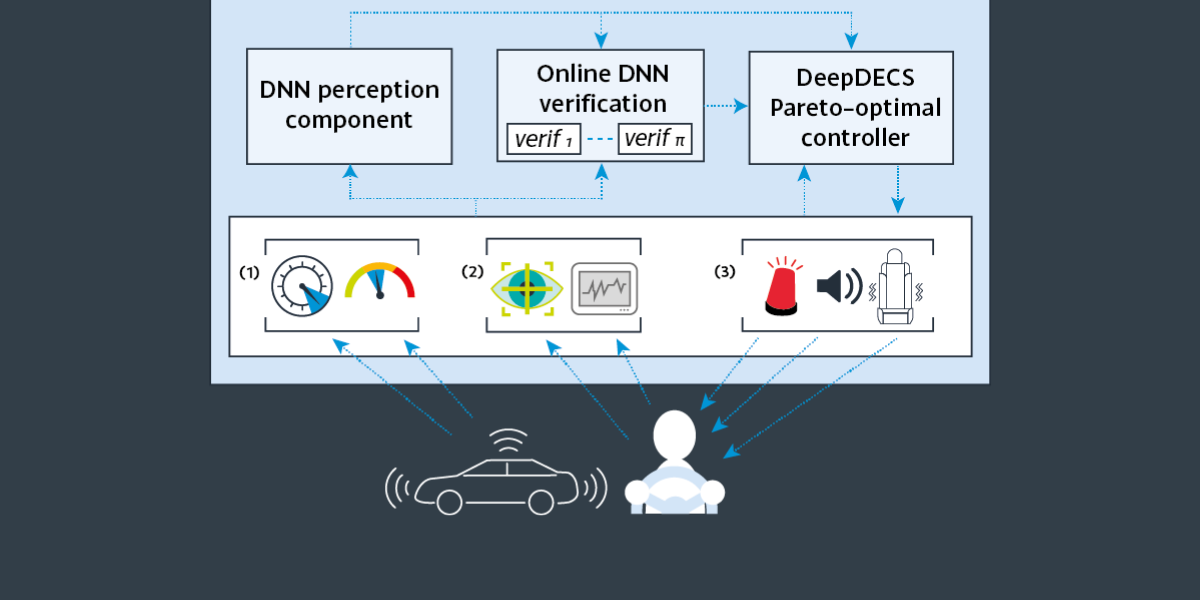

Like many safety-critical autonomous systems, the driver-attentiveness management solution prototyped by Safe-SCAD combines conventional software and deep learning components. Integrating the two paradigms is essential for ensuring that Level 3 autonomous car drivers retain sufficient situational awareness to safely take over the driving task when the car approaches traffic conditions outside its operational design domain. Driver biometrics and car parameters collected by specialised sensors are fed into a deep neural network (DNN) responsible for predicting the driver’s response time to a potential control takeover request from the car. Based on the DNN predictions, a conventional software controller issues visual, acoustic and/or haptic alerts when the driver is deemed overly distracted.

As traditional verification methods cannot provide safety guarantees for systems mixing conventional software and deep-learning components, the use of DNN perception within our solution posed major challenges for its assurance. We overcame them using Deep-learning aware Discrete-Event Controller Synthesis (DeepDECS), a novel hybrid verification approach co-developed with colleagues from the AAIP research pillar, Safety assurance of decision making in autonomous systems (SADA). DeepDECS uses a combination of DNN verification methods – both when the autonomous system is developed, and during its operation:

- At development time, DNN verification is used to quantify the aleatory uncertainty of the deep learning perception, so that traditional verification methods can synthesise conventional software controllers aware of the DNN-perception uncertainty.

- In operation, online DNN verification associates trustworthiness levels with deep-learning predictions, enabling the controllers of autonomous systems to react confidently to trustworthy DNN outputs, and conservatively to DNN outputs that cannot be trusted.

Professor Radu Calinescu

Professor of Computer Science

University of York

PI of the Safe-SCAD project

Contact us

Centre for Assuring Autonomy

assuring-autonomy@york.ac.uk

+44 (0)1904 325345

Institute for Safe Autonomy, University of York, Deramore Lane, York YO10 5GH

Contact us

Centre for Assuring Autonomy

assuring-autonomy@york.ac.uk

+44 (0)1904 325345

Institute for Safe Autonomy, University of York, Deramore Lane, York YO10 5GH