Sense-Assess-Explain (SAX): Building trust in autonomous vehicles in challenging real-world driving scenarios

The challenge

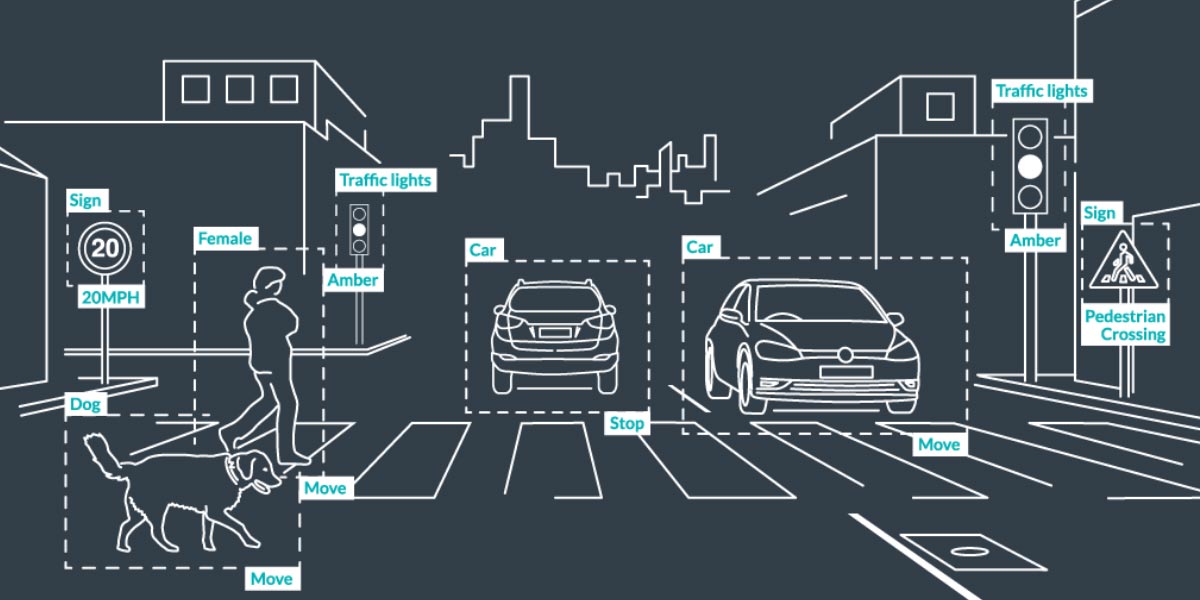

Understanding the decisions taken by an autonomous system is key to building public trust in robotics and autonomous systems (RAS). This project addressed explainability by researching autonomous systems that can:

- sense and fully understand their environment

- assess their own capabilities

- provide causal explanations for their own decisions

The research

In on-road and off-road driving scenarios, the project team studied the requirements of explanations for key stakeholders (users, system developers, regulators). These requirements informed the development of algorithms that generate causal explanations.

The work focused on scenarios in which the performance of traditional sensors (e.g. cameras) degrades significantly or fails completely (e.g. in harsh weather conditions). The project developed methods that assess the performance of perception systems and adapt to environmental changes by switching to another sensor model or to a different sensor modality. For the latter, alternative sensing devices (including radar and acoustic sensors) were investigated to provide robust perception in situations where traditional sensors fail.
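The assess-and-switch idea described above can be illustrated with a minimal sketch. It is not the project's implementation: the `SensorReading` type, the `select_modality` function, the confidence scores and the 0.5 threshold are all hypothetical, chosen only to show how a self-assessed performance estimate might drive a fallback from a preferred sensor to an alternative one.

```python
from dataclasses import dataclass


@dataclass
class SensorReading:
    """Hypothetical container for one modality's self-assessment."""
    modality: str      # e.g. "camera", "radar", "audio"
    confidence: float  # assumed performance estimate in [0, 1]


def select_modality(readings, preferred="camera", threshold=0.5):
    """Keep the preferred modality while its confidence is at or above
    the threshold; otherwise fall back to the most confident modality."""
    if not readings:
        raise ValueError("at least one sensor reading is required")
    by_name = {r.modality: r for r in readings}
    primary = by_name.get(preferred)
    if primary is not None and primary.confidence >= threshold:
        return primary.modality
    # Preferred sensor is missing or degraded: pick the best alternative.
    return max(readings, key=lambda r: r.confidence).modality
```

For example, with a camera confidence of 0.2 in fog and a radar confidence of 0.7, `select_modality` would return `"radar"`; in clear conditions with a camera confidence of 0.9 it would keep `"camera"`.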

The results

The project presented a broad view of autonomous vehicle (AV) sensing requirements and showed how less common sensing modalities can help to overcome challenging operational scenarios.

The team showed that scanning radar can rival vision and laser in scene understanding across all crucial autonomy-enabling tasks. They presented an overview of AV training requirements and of approaches to tackle the lack of specific sensing combinations or labels. They also released a large dataset covering a variety of scenarios and conditions, recorded with a full sensor suite and accompanied by manually annotated labels for odometry, localisation, semantic segmentation and object detection.

Different types of explanations were identified in challenging driving scenarios. The team characterised several dimensions of explanations and identified the different stakeholders for whom explanations are relevant. Furthermore, they developed methods and provided guidance for generating explanations from vehicle perception and action data in dynamic driving scenarios.
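As a rough illustration of generating an explanation from perception and action data, the sketch below pairs an observed action with a perceived object using a hand-written rule set. Everything in it is an assumption made for illustration (the `Observation` type, the `explain` function and the rule format); it does not reproduce the methods developed in the project.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """Hypothetical perception output for one detected object."""
    object_type: str  # e.g. "pedestrian"
    relation: str     # e.g. "crossing ahead"


def explain(action, observations, rules):
    """Return a causal 'action because cause' sentence.

    `rules` is an assumed set of (action, object_type) pairs stating
    which perceived objects count as a cause of which action.
    """
    for obs in observations:
        if (action, obs.object_type) in rules:
            return (f"The vehicle is {action} because a "
                    f"{obs.object_type} is {obs.relation}.")
    # No rule links the action to anything that was perceived.
    return f"The vehicle is {action}; no matching cause was observed."
```

A template of this kind would suit a passenger-facing explanation; developers or regulators would probably need more detail, such as the underlying detections and confidence values.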

Body of Knowledge guidance from the project

- 2.2.1.1 Defining sensing requirements

- 2.2.1.2 Defining understanding requirements

- 2.3 Implementing requirements using machine learning (ML)

- 2.6 Handling change during operation

- 2.8 Explainability

- 3 Understanding and controlling deviations from required behaviour

Papers and presentations

- Gadd, M., De Martini, D., and Newman, P. “Contrastive learning for unsupervised radar place recognition,” in IEEE International Conference on Advanced Robotics (ICAR), December 2021

- Omeiza, D., Webb, H., Jirotka, M., and Kunze, L. “Explanations in autonomous driving: a survey”, IEEE Transactions on Intelligent Transportation Systems, 2021

- Broome, M., Gadd, M., De Martini, D., and Newman, P. “On the Road: Route Proposal from Radar Self-Supervised by Fuzzy LiDAR Traversability,” AI. Multidisciplinary Digital Publishing Institute (MDPI), November 2020

- Williams, D., Gadd, M., De Martini, D., and Newman, P. “Fool me once: robust selective segmentation via out-of-distribution detection with contrastive learning,” in IEEE International Conference on Robotics and Automation (ICRA), 2021. Preprint available.

- Omeiza, D., Kollnig, K., Webb, H., Jirotka, M., and Kunze, L. “Why not explain? Effects of explanations on human perceptions of autonomous driving: a user study,” in 2021 IEEE International Conference on Advanced Robotics and its Social Impacts (ARSO), 2021

- Omeiza, D., Webb, H., Jirotka, M., and Kunze, L. “Towards accountability: providing intelligible explanations in autonomous driving,” in 2021 IEEE Intelligent Vehicles Symposium (IV), 2021

- De Martini, D., Gadd, M., and Newman, P. "kRadar++: Coarse-to-Fine FMCW Scanning Radar Localisation", in Sensors, Special Issue on Sensing Applications in Robotics, vol. 20, no. 21, p. 6002, 2020. Multidisciplinary Digital Publishing Institute (MDPI), October 2020

- Gadd, M., De Martini, D., Marchegiani, L., Newman, P., Kunze, L. “Sense-Assess-eXplain (SAX): Building Trust in Autonomous Vehicles in Challenging Real-World Driving Scenarios,” in Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Workshop on Ensuring and Validating Safety for Automated Vehicles (EVSAV), (Las Vegas, NV, USA), October 2020

- Williams, D., De Martini, D., Gadd, M., Marchegiani, L., and Newman, P. “Keep off the Grass: Permissible Driving Routes from Radar with Weak Audio Supervision,” in IEEE Intelligent Transportation Systems Conference (ITSC), (Rhodes, Greece), September 2020

- Kaul, P., De Martini, D., Gadd, M., Newman, P. “RSS-Net: Weakly-Supervised Multi-Class Semantic Segmentation with FMCW Radar,” in Proceedings of the IEEE Intelligent Vehicles Symposium (IV), June 2020

- Gadd, M., De Martini, D., and Newman, P. “Look Around You: Sequence-based Radar Place Recognition with Learned Rotational Invariance,” in IEEE/ION Position, Location and Navigation Symposium (PLANS), April 2020

- Barnes, D., Gadd, M., Murcutt, P., Newman, P., and Posner, I. “The Oxford Radar RobotCar Dataset: A Radar Extension to the Oxford RobotCar Dataset,” in Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), 2020

- Saftescu, S., Gadd, M., De Martini, D., Barnes, D., and Newman, P. “Kidnapped Radar: Topological Radar Localisation using Rotationally-Invariant Metric Learning,” in Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), 2020

- Tang, TY., De Martini, D., Barnes, D., and Newman, P. “RSL-Net: Localising in Satellite Images From a Radar on the Ground,” in IEEE Robotics and Automation Letters (RA-L), 2020

- Dr Lars Kunze co-organised the 2nd Workshop on 3D-Deep Learning for Automated Driving at the IEEE Intelligent Vehicles Symposium (IV’2020) - Las Vegas, NV, United States, June 2020.

- Dr Lars Kunze is a co-author of Marina Jirotka’s talk “Towards Responsible Innovation in Autonomous Vehicles” at the Driverless Futures? research workshop on “The politics of autonomous vehicles”, 16-17 December 2019, University College London.

Project partners

- Oxford Robotics Institute (ORI) at the University of Oxford